1.2. What is Machine Vision

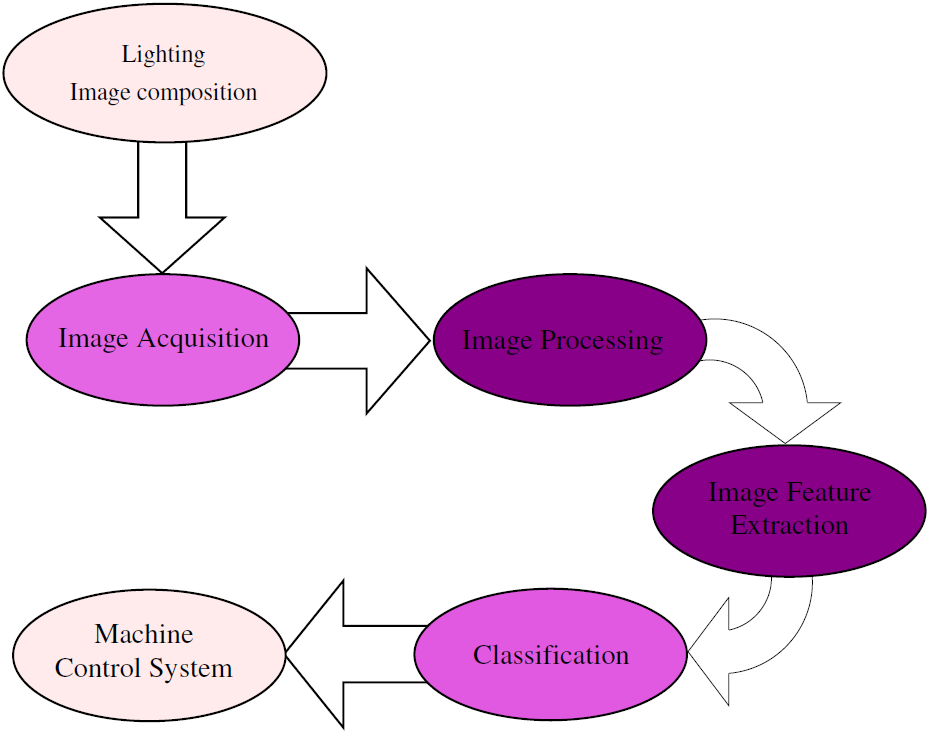

Machine vision systems analyze camera images to extract feature data that helps robots and automated machines understand the physical scene depicted in the image. Vision is a rich sensory input: in many instances it yields detailed data that no other sensor could provide. The job of the machine vision system is to reduce the vast amount of data contained in an image to measurements of the image content that a machine can use directly.

Machine vision uses image processing, but the two terms are not the same. Image processing generates new images from existing images. It is used in the early stages of machine vision for tasks such as filtering (neighborhood, or spatial, processing), segmentation, edge detection, and geometric operations. Not every image processing algorithm is typically used in machine vision systems. Examples that are of secondary concern to machine vision include de-blurring, image stitching, and image and video compression.
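To make the distinction concrete, here is a minimal sketch in plain Python (chosen so that it runs without any toolbox; it is not the course's MATLAB code). The `threshold` function is image processing: it takes an image and returns a new image. The `measure_blob` function is the machine vision step: it reduces an image to a few numbers, here the area and centroid of the bright region. The function names and the tiny 5x5 test image are illustrative assumptions.

```python
def threshold(image, level):
    """Image processing: return a new binary image (image in, image out)."""
    return [[1 if pixel > level else 0 for pixel in row] for row in image]


def measure_blob(binary):
    """Machine vision: reduce a binary image to feature data.

    Returns (area, (row_centroid, col_centroid)), or (0, None) if the
    image contains no foreground pixels.
    """
    area = 0
    row_sum = 0
    col_sum = 0
    for r, row in enumerate(binary):
        for c, value in enumerate(row):
            if value:
                area += 1
                row_sum += r
                col_sum += c
    if area == 0:
        return 0, None
    return area, (row_sum / area, col_sum / area)


# Hypothetical 5x5 grayscale image containing a bright 2x2 object.
gray = [
    [10, 12, 11, 10, 9],
    [11, 200, 210, 12, 10],
    [10, 205, 215, 11, 12],
    [9, 11, 10, 12, 11],
    [10, 10, 11, 9, 10],
]

binary = threshold(gray, 128)           # still an image
area, centroid = measure_blob(binary)   # numbers a machine can act on
print(area, centroid)
```

The binary image is only an intermediate result. What a downstream controller would consume is the pair of measurements returned by `measure_blob`.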

Two other related terms in common usage, computer vision and robotic vision, have essentially the same meaning as machine vision and differ mainly in the intended application. One might use the term computer vision for a system that does not control external machines. For example, face recognition for security verification would typically be labeled a computer vision application. The term robotic vision is used in connection with machines that are specifically robots and would not include machine vision topics such as quality control inspection. Thus robotic vision can be considered a subset of machine vision.

A few other technologies are also used in connection with machine vision systems. These include pattern classification, machine learning, artificial intelligence, and machine control systems.

1.3. Applications of Machine Vision

Vision systems are a very common source of sensory input for robotic and automation systems.

- In factories they can be used for simple, but important, applications such as detecting the presence of a product at a desired location.

- Perhaps the most common application of machine vision is for quality control inspections.

- Robotic systems can use vision as input to their guidance systems, not only to avoid obstacles but also for visual servoing, where the vision system guides the robot towards a goal.
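The first application above, presence detection, can be as simple as counting bright pixels inside a fixed region of interest. The sketch below is plain Python. The function name `product_present`, the gray-level threshold of 128, and the 50% fill fraction are illustrative assumptions, not values taken from any particular factory system.

```python
def product_present(image, roi, level=128, min_fraction=0.5):
    """Return True if enough pixels inside the ROI are brighter than level.

    roi is (top, left, height, width) in pixel coordinates.
    """
    top, left, height, width = roi
    bright = 0
    for r in range(top, top + height):
        for c in range(left, left + width):
            if image[r][c] > level:
                bright += 1
    return bright / (height * width) >= min_fraction


# Hypothetical 4x6 frames: a dark conveyor, with and without a bright product.
empty_frame = [[20] * 6 for _ in range(4)]
loaded_frame = [row[:] for row in empty_frame]
for r in range(1, 3):
    for c in range(2, 5):
        loaded_frame[r][c] = 220

roi = (1, 2, 2, 3)  # where the product is expected to sit
print(product_present(empty_frame, roi))
print(product_present(loaded_frame, roi))
```

A real system would also need controlled lighting and a threshold tuned to the product, which this sketch leaves out.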

Note

The Philips Lighting factory formerly located in Salina used vision systems to inspect and measure the size and shape of the glass light bulb tubes at every step of the manufacturing process.

1.4. Environment

1.4.1. Software

Two software environments are primarily used to study and develop vision systems in both industry and academia.

OpenCV (Open Source Computer Vision Library) is a C/C++ library of vision processing routines that is commonly used from C++ and Python programs (Java is also supported).

For exploration and research of vision processing, MATLAB is a very popular environment. The overall capabilities of MATLAB, especially its vector, matrix, and visualization features, make it a very convenient environment in which to explore various vision processing algorithms. To support development of real-time vision systems, MATLAB programs can be converted to C/C++ code and also integrated with the OpenCV libraries if needed.

We will use MATLAB along with three toolboxes, which provide specific functions and classes needed for vision processing. A nice benefit of the toolboxes is the ability to view the source code, which can often help one understand the algorithms and also provides examples of good coding practices. Two of the toolboxes are open source contributions from Peter Corke, who is a professor at Queensland University of Technology and is the director of the Australian Centre of Excellence for Robotic Vision.

- MathWorks Image Processing Toolbox (IPT): The add-on IPT toolbox from MathWorks is the de facto default toolbox for image processing work. The functions in the toolbox have very good performance and are well documented in the MATLAB help system and in several books.

- Machine Vision Toolbox (MVTB): Peter Corke has developed a nice open source collection of functions and classes for machine vision. Some of the MVTB functions duplicate functions in the IPT, but the MVTB does provide some unique capabilities, and the redundant MVTB functions often provide clever features not found in the IPT. The MVTB has documentation available from the MATLAB help system and is demonstrated in the author’s book and a number of videos (see below).

- Spatial Math Toolbox (SMTB): This open source toolbox, also from Peter Corke, provides functions and objects useful for geometric transformations of pixels in an image.
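As a rough illustration of what a geometric transformation of pixel coordinates involves, the sketch below builds a 3x3 homogeneous transform (a rotation followed by a translation) and applies it to one pixel location. It is plain Python rather than MATLAB and does not use the SMTB API; the helper names `transform2d` and `apply_transform` are hypothetical.

```python
import math


def transform2d(tx, ty, theta):
    """Build a 3x3 homogeneous matrix: rotate by theta (radians), then translate."""
    c, s = math.cos(theta), math.sin(theta)
    return [
        [c, -s, tx],
        [s, c, ty],
        [0.0, 0.0, 1.0],
    ]


def apply_transform(matrix, point):
    """Map an (x, y) point through a homogeneous transform."""
    x, y = point
    x_out = matrix[0][0] * x + matrix[0][1] * y + matrix[0][2]
    y_out = matrix[1][0] * x + matrix[1][1] * y + matrix[1][2]
    return x_out, y_out


# Rotate the pixel coordinate (10, 0) by 90 degrees, then shift it by (5, 5).
T = transform2d(5, 5, math.pi / 2)
x_new, y_new = apply_transform(T, (10, 0))
print(round(x_new, 6), round(y_new, 6))
```

Packing rotation and translation into a single matrix means that a chain of transformations can be composed by matrix multiplication before any pixel is touched.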

1.4.2. Books

We will primarily reference two books in this course. In general, the course will focus mostly on the first book at the beginning of the semester and shift the focus more to the second book in the later part of the semester.

- Practical Image and Video Processing Using MATLAB [PIVP] by Oge Marques is exactly what the title says. It is a very practical (applied and easy to follow) introduction to image processing. The book uses the MathWorks IPT for all example code. A nice learning feature of the book is that the chapters have tutorials where students run given MATLAB code and answer questions about the observed results. Since the code is given, students learn by example and can focus on what is happening rather than on the coding details. This was the only book used the first two times that the course was offered (Spring 2016 and Spring 2017). My only issues with the book are that its objective is to teach image processing, not machine vision, and that its coverage of some topics is slower than time allows if we are to cover everything desired in this course.

  The book may be purchased, or individual chapters may be downloaded from IEEE Xplore. K-State students who are on campus or using the full tunnel VPN may download the chapters for free because of our library’s subscription. See Canvas for more resources from the book.

1.4.3. Video Resources

There are many video lectures and demonstrations online on the topic of vision systems. I find two video resources especially good and relevant to this class.

- Introduction to Computer Vision was a Udacity MOOC by Aaron Bobick. Professor Bobick is now at Washington University in St. Louis, but he recorded the lectures for that course while he was at Georgia Tech. The course no longer seems to be offered by Udacity, but there is a YouTube Playlist with the lectures. The lectures are very good at explaining the basic concepts of how algorithms work.

- QUT Robot Academy contains various lectures and demonstrations by Peter Corke. The lectures are mostly taken from his series of MOOCs on Introduction to Robotics and Robotic Vision. Of course, we will focus on the Robotic Vision videos. These videos contain slightly less description of the algorithms, but they show practical examples that use the implementations of the algorithms contained in the MVTB. These videos are a nice complement to his book, [RVC].