16. Visual Servoing

Reading Assignment

Please read chapter 15 of [RVC].

Video Resource

- Dr. Corke’s video series on Visual Servoing.

- This video shows an example of PBVS on a 6-DOF robot arm.

- This video shows an example of IBVS on a 6-DOF robot arm.

Visual servo control refers to the use of vision data to control the motion of a robot. The vision data may be acquired from a camera that is mounted directly on a robot manipulator or on a mobile robot, in which case motion of the robot induces camera motion. Alternatively, the camera can be fixed in the workspace so that it observes the robot motion from a stationary position. The focus here is on the former, the so-called eye-in-hand configuration.

To set the basic context for how visual servoing is accomplished, refer to Camera Matrix. The pose of the camera constitutes the extrinsic parameters of the camera matrix. Given the camera matrix, the camera pose, and the locations of fixed objects in the world, we can calculate where the objects should appear in the image. When the camera (attached to a robot) moves, the objects move in the image. Thus, by differentiating the projection equations with respect to the camera motion, we can relate the velocity of the camera to the velocity of the objects in the image. This relationship is called the image Jacobian matrix. Jacobian matrices are widely used in mechanical engineering to relate the velocities of one part of a system to the velocities of another part. A manipulator Jacobian, for example, relates joint velocities to the velocity of the end effector. In this case, the Jacobian matrix relates the velocity of the camera to the image-plane velocity of fixed objects seen by the camera.
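As a rough illustration of the image Jacobian for a single point, here is a minimal sketch. It assumes normalized image coordinates (focal length of 1, principal point at the origin), a point whose depth `Z` in the camera frame is known, and a camera velocity ordered as `(vx, vy, vz, wx, wy, wz)` in the camera frame. The function names `project` and `point_jacobian` are made up for this example and do not come from [RVC] or any toolbox.

```python
import numpy as np

def project(P):
    """Project a 3-D point P = (X, Y, Z), given in the camera frame,
    to normalized image coordinates (x, y) = (X/Z, Y/Z)."""
    X, Y, Z = P
    return np.array([X / Z, Y / Z])

def point_jacobian(x, y, Z):
    """2x6 image Jacobian of one point feature (assumed normalized coordinates).

    Maps camera velocity (vx, vy, vz, wx, wy, wz) to the image-plane
    velocity (x_dot, y_dot) of a point that is fixed in the world.
    """
    return np.array([
        [-1 / Z, 0, x / Z, x * y, -(1 + x**2), y],
        [0, -1 / Z, y / Z, 1 + y**2, -x * y, -x],
    ])

# Hypothetical example values: a point 2 m in front of the camera.
P = np.array([0.2, -0.1, 2.0])
cam_vel = np.array([0.1, 0.0, 0.05, 0.0, 0.02, 0.0])

x, y = project(P)
feature_vel = point_jacobian(x, y, P[2]) @ cam_vel
print("image-plane velocity of the point:", feature_vel)
```

Note that the Jacobian depends on the depth `Z` of the point, which a single image does not provide directly. In practice the depth has to be estimated or approximated.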

In visual servoing, we usually use a pattern of three or four objects. We want to move the camera such that the pattern in the image matches a desired location and orientation. The error between the current image pattern and the desired image pattern, together with the inverse of the image Jacobian matrix (the pseudo-inverse when the matrix is not square), tells us how to change the camera pose to align the image pattern with the desired image pattern.
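The idea of combining the image error with the inverse of the image Jacobian can be sketched as a simple proportional control loop. This is only an illustrative simulation, not the implementation from [RVC]. It assumes normalized image coordinates, four point features, perfectly known depths, and a hypothetical gain of 0.5. The helper names (`ibvs_velocity`, `point_jacobian`, `project`) are invented for the example.

```python
import numpy as np

def point_jacobian(x, y, Z):
    """2x6 image Jacobian of one point in normalized image coordinates."""
    return np.array([
        [-1 / Z, 0, x / Z, x * y, -(1 + x**2), y],
        [0, -1 / Z, y / Z, 1 + y**2, -x * y, -x],
    ])

def project(points):
    """Project an (N, 3) array of camera-frame points to (N, 2) image points."""
    return points[:, :2] / points[:, 2:3]

def ibvs_velocity(features, desired, depths, gain=0.5):
    """Camera velocity (vx, vy, vz, wx, wy, wz) that reduces the feature error."""
    # Stack one 2x6 Jacobian per point: four points give an 8x6 matrix.
    J = np.vstack([point_jacobian(x, y, Z)
                   for (x, y), Z in zip(features, depths)])
    error = (features - desired).ravel()
    # The stacked matrix is not square, so use the pseudo-inverse.
    return -gain * np.linalg.pinv(J) @ error

# Corners of a square as seen from the desired camera pose (camera frame).
goal_points = np.array([[-0.2, -0.2, 2.0], [0.2, -0.2, 2.0],
                        [0.2, 0.2, 2.0], [-0.2, 0.2, 2.0]])
desired = project(goal_points)

# The same corners as seen from a displaced starting pose.
points = goal_points + np.array([0.1, -0.05, 0.3])
initial_error = np.linalg.norm(project(points) - desired)

dt = 0.05
for _ in range(400):
    vel = ibvs_velocity(project(points), desired, points[:, 2])
    v, w = vel[:3], vel[3:]
    # A fixed point seen from a moving camera: P_dot = -v - w x P
    points = points + dt * (-v - np.cross(w, points))

final_error = np.linalg.norm(project(points) - desired)
print("feature error before:", initial_error, "after:", final_error)
```

With three points the stacked Jacobian is 6x6 and an ordinary inverse could be used. A fourth point is commonly added to avoid ambiguous configurations, which is why the pseudo-inverse appears here.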

This is a very interesting and also difficult topic. Dr. Corke is able to explain this topic much better than I can. In class we will watch videos 3 through 7 of his visual servoing series.

As a quick point of reference, there are two general approaches to eye-in-hand visual servoing: position-based visual servoing (PBVS) and image-based visual servoing (IBVS).

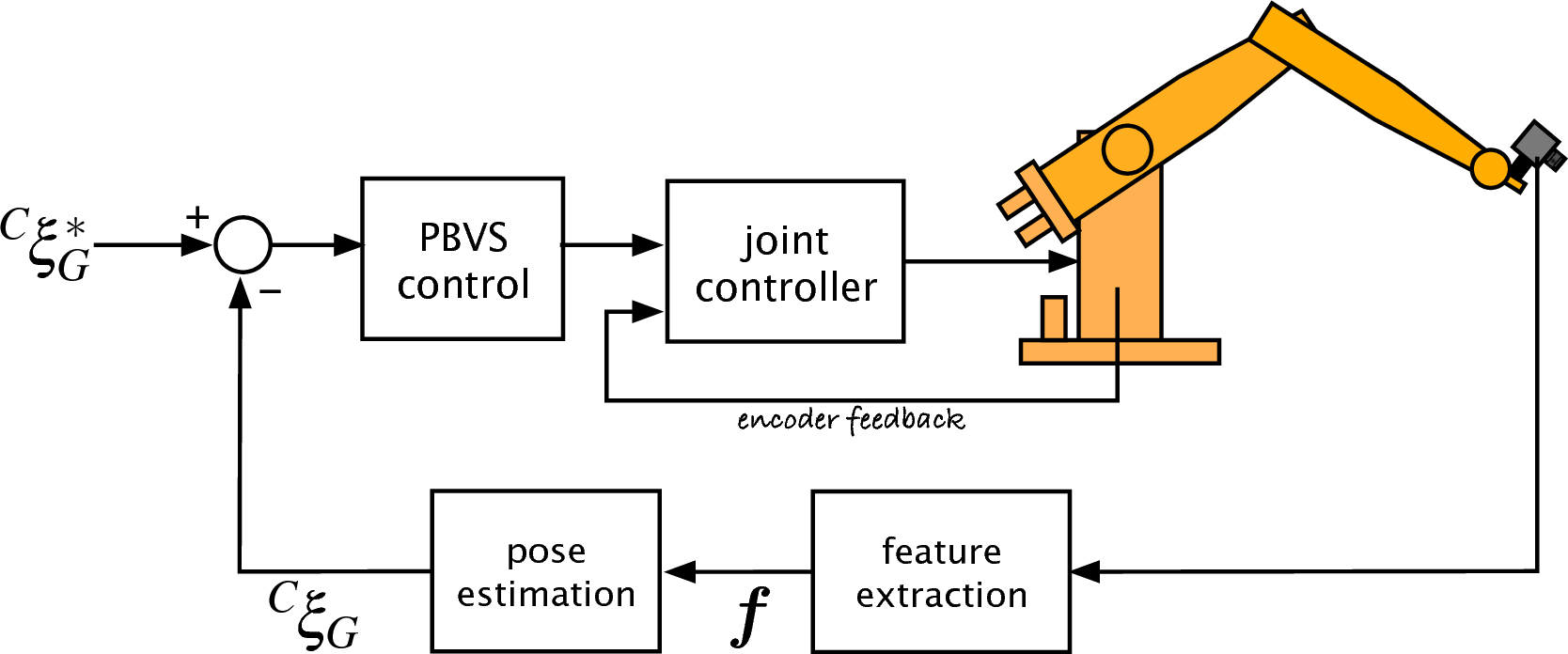

Figure 15.2a from [RVC] – Position-Based Visual Control. The features from the image, the known geometry of the goal, and the camera pose are used to estimate the pose of the goal.
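Once the pose of the goal has been estimated, the required camera motion follows from composing poses. The sketch below is only meant to illustrate that step and skips the pose estimation itself. It assumes poses are given as 4x4 homogeneous transforms of the goal relative to the camera. The names `transform` and `pbvs_camera_motion` and the numeric values are hypothetical.

```python
import numpy as np

def transform(yaw, translation):
    """Homogeneous transform: rotation by `yaw` radians about z, plus a translation."""
    c, s = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0], [s, c, 0], [0, 0, 1]]
    T[:3, 3] = translation
    return T

def pbvs_camera_motion(T_cam_goal, T_des_goal):
    """Camera motion, expressed in the current camera frame, that brings
    the goal to its desired pose relative to the camera."""
    return T_cam_goal @ np.linalg.inv(T_des_goal)

# Estimated pose of the goal in the current camera frame (assumed given).
T_cam_goal = transform(0.3, [0.1, -0.2, 1.5])
# Pose of the goal relative to the camera that we want to reach.
T_des_goal = transform(0.0, [0.0, 0.0, 1.0])

T_delta = pbvs_camera_motion(T_cam_goal, T_des_goal)

# After moving the camera by T_delta, the goal appears at the desired pose.
T_after = np.linalg.inv(T_delta) @ T_cam_goal
print(np.round(T_after, 3))
```

A real controller would typically apply only a fraction of `T_delta` at each time step and then re-estimate the goal pose from a new image.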

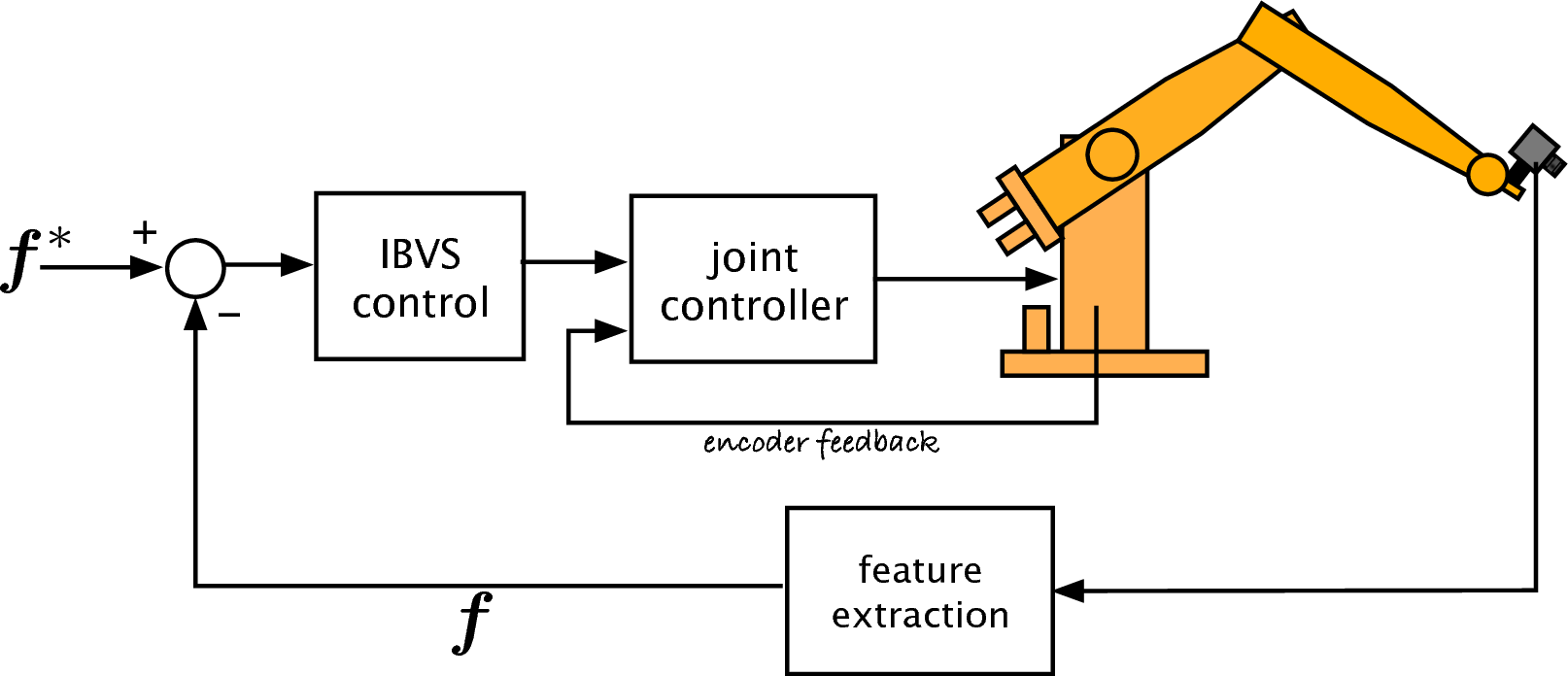

Figure 15.2b from [RVC] – Image-Based Visual Control. The pose of the goal is not estimated. Instead, the features of the current camera image are compared with the features of an image taken when the camera has the desired pose, and the difference is used to calculate the camera movement needed to achieve the desired features.